The ZK Chronicles: Sum Check

Now that we’re equipped with finite fields and polynomials, it’s time to take a look at our very first proving system. Yay!

Truth is, the tool we’re about to present might feel a bit pointless at first glance. When studied in isolation, it will feel like an extremely convoluted way to perform a very simple task.

Don’t let that discourage you though! As it happens, this tool is extremely important for a wonderful reason: many problems we want to prove things about can be reduced to a version of what we’re about to unveil.

Therefore, I ask you to approach today’s article with an open mind. Think of today’s topic as just another stepping stone in our path to obtaining useful proving systems.

Things will start clicking in very soon, I promise. Trust the process!

Alright then! Expectations are high, so let’s waste no time. Let’s talk about the sum-check protocol.

Getting Started

As a short reminder of our very first encounter, what we want to do is build some sort of proving system to show that the result of some computation is valid, and do so in less time than the computation itself, ideally.

Cool, so... What kind of computation are we talking about?

So far, our toolkit only includes polynomials. Using these as a basis, we could try to come up with ways of representing useful computations — the kind we can prove things about.

Okay then, let’s start small. Take some univariate polynomial . Perhaps the simplest thing we can do with it is to evaluate it at some point. And on that account, I want to note that there are two special points with an interesting behavior upon evaluation: and .

Think about it for a second: when , all terms containing just vanish, leaving us with the term with no . This is usually called the constant term, or the zeroth coefficient.

Likewise, evaluating a polynomial at simply gives us the sum of all the coefficients in the polynomial!

Essentially, these points are nice because we don’t need to spend time calculating any powers of during evaluation. Granted — we could use any other point, but these just happen to be convenient, and very important for today’s construction.

I know. Not the most fun stuff, but it’s a start.

The next thing we might try is adding evaluations together. For instance, we could have:

Where is some field element. Performing this calculation directly is quite easy for the reasons we stated before: evaluation is very straightforward. It seems we’re not really making much progress — after all, we said that proving a result makes sense only when there’s a real gain in verification time versus computation effort.

And here, computation is so simple that it’s kind of absurd to try and build a separate algorithm for verification.

I mean, just look at the expression above!

So how about we kick it up a notch?

Turning Up the Heat

What would happen if our starting point was a multivariate polynomial instead? Something like this:

Now we’re talking.

In terms of evaluation complexity, we’re pretty much in the same situation as before: it’s quite simple to evaluate these polynomials at variable values and .

But the situation changes when we consider combinatorics. Since we now have variables, and each can be either or , we now have possible combinations to evaluate!

These points, represented as the set , form what’s called the boolean hypercube.

Calculating this sum does indeed take some more work:

In Big O terms, we can say that calculating takes exponential time . Meaning it takes at least evaluations.

I’ll assume familiarity with this notation, but as a short explainer, what it does is express complexity based on the term in an expression that grows the fastest.

As variable values increase, said term will grow bigger (more significant) than others, rendering every other term negligible in comparison.

So for example, take . As grows larger, grows much more rapidly than — and soon enough, will be much bigger than . Thus, we say the complexity of the expression is . Notice we ignore multiplicative factors, as they don’t affect the behavior of the function — they only scale it.

For very large values of , exponential time can be a really long time.

Prohibitively long for my poor computer, but maybe not for someone with some heavy-duty hardware.

In this context, it does make sense to try and fabricate an algorithm for fast verification of some claimed result.

And this is exactly the goal of the sum-check protocol!

Let’s see how it works.

The Protocol

A protocol is simply a sequence of steps, defined by rules that must be followed during an interaction between parties. This is exactly our situation: a prover and a verifier will interact in a very specific manner.

The beauty of this protocol we’re about to present lies in its recursive nature.

Here’s the plan: we’ll first explain how a single round works. Once we finish with that, it will be immediately clear that to continue moving forward, we’ll have to do another one of those rounds. Each round will sort of reduce the problem, until we’re left with a condition that’s very simple to check.

Easy, right?

Woah, woah, chill... Okay, one step at a time.

A Sum-Check Round

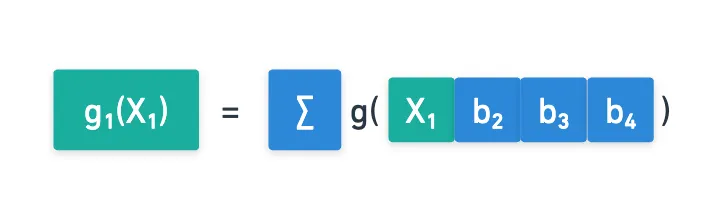

The setup is the following: a prover claims to have calculated the sum for a polynomial over . Their goal is to convince a verifier that is the correct result.

To do so, the prover will do something quite strange in appearance: a partial sum of .

If you look closely, you’ll notice that we have not included evaluations for the first variable, . For this reason the result is a new polynomial — a univariate one, specifically.

Believe it or not, this is all they have to do. The prover can send and to the verifier, and with these values, the verifier can easily check the following equality:

Clearly, if is correctly calculated, this equality should hold (feel free to check that yourself if you’re not convinced!). So if this test passes, we’re done! The verifier can confidently say that is correct!

Or... Can they?

Problems in Paradise

In fact, there’s a critical issue with this reasoning — and it’s a consideration we need to always keep in mind as we move forward in our journey. We’ve said it before, but I’ll say it again, and in big letters, so you guys don’t ever forget:

![]()

![]() The prover could be lying

The prover could be lying![]()

![]()

What could they be lying about? Well, just about everything they can lie about — but in this case, they don’t have many options: they either don’t know , or they lie about the value of .

So, let’s say the prover wants to convince the verifier that some value is the correct result, but is in fact incorrect. How would they go about it?

Pretty much the only knob they can control is that polynomial . And if you think about it, nothing stops the prover from crafting a simple polynomial so that the verifier check passes!

It wouldn’t even be that hard!

As things are right now, the algorithm won’t work. We’re gonna need a way to check that was correctly calculated.

Let’s take a moment to ponder our options. Naturally, verifiers can’t trust a prover’s word. And is just a polynomial — all we can do with it is evaluate it at some point.

Wait... What happens if we evaluate at some random point ?

Do you see it? A little miracle has unfolded before our eyes: we’re back where we started!

Yeah, sorry. Let me explain.

Once we choose and fix a random point , the evaluation is actually a sum over a reduced boolean hypercube. It's as if we're replacing the original function for another function with one less variable:

Now, we can ask the same original question: can we prove this reduced version of sums up to over a reduced boolean hypercube?

And the magic, my friends, is that another round of sum-checking does the trick!

The Full Picture

What I’m getting at here is that we can do this over and over in a recursive manner, reducing the number of variables in by one on every round. Therefore, after rounds, we’ll be left with a single evaluation of .

Before tying things up, I believe seeing this in action with a simple example will help a lot.

Take for instance this polynomial with variables:

This polynomial has to be defined over some finite field, so let’s choose a simple one, like .

First, the prover needs to compute the sum over the boolean hypercube .

That’s a total of evaluations added together!

Feel free to do the calculation yourself — but in the spirit of seeing the proving system in action, let’s say the prover claims the result to be .

The prover also sends the polynomial , and claims it to be . The verifier then simply checks:

Okay, first round, done. Now the verifier picks a random value . Let’s suppose that value is .

The prover then calculates , which happens to yield . So now, the prover has to calculate this polynomial here:

The result of this is .

And we can easily calculate this because we, as provers, know the original .

If we didn’t know , then this random selection of would already make life hard for us! This type of “challenge” interaction is very important, and we’ll formalize it later.

Cool! We now check whether:

Although the result seems to be wrong (we get ), everything clicks once we remember we're working on a finite field, so .

For the third and fourth rounds, we just rinse and repeat. To keep things brief, I'll just summarize the steps:

- Verifier chooses .

- Prover responds with , and .

- Verifier checks , and chooses .

- Prover responds with and .

- Verifier checks , and chooses .

The full process looks like this:

Everything checks out nicely so far. But we have a little problem: we’ve run out of variables!

Closing the Loop

Right — the original polynomial cannot be reduced any further. The verifier has received , and they need to check whether (which in this case, equals ) is truly the same result as evaluating .

Since we cannot perform another round, it looks like we don’t have any options to check the validity of this last value. And this is not cool: what if the value is just made up so that everything works?

At this point, we’re gonna need a different strategy.

We need a mechanism to verify that is correct. Note that . If there was some magical way to safely evaluate at that input, then we could compare with , and nicely close the procedure.

Sadly though... It’s too early in our journey to see how this can be achieved mathematically.

Rather than asking you to run on trust me bro vibes though, I propose the following: let’s put a name on this, so that we don’t forget about it. And when the time comes, we can circle back to this concept, and truly tie everything together.

We’ll say that we have oracle access to . By this, we mean that we have a way to evaluate at some random point, and we’re guaranteed to have a correct value.

So finally, after all the checks in every round, the verifier performs an oracle query of at , and accepts the proof if the value matches .

Of course, this whole procedure can be generalized to any number of variables — and the time savings become more relevant the more variables there are!

And that's the sum-check protocol!

Completeness and Soundness

It’s a very elegant algorithm, that’s for sure. I know it might feel like a little too much at first, so encourage you to take your time to let it sink in before moving forward.

With these proving methods though, we need to be precise — meaning that for us to confidently say “yeah, this works”, we need to formally show that the algorithm is both complete and sound.

This will become standard practice for any algorithm we learn about from here on. Since it’s our first time presenting an algorithm in this series, let’s briefly recap what these properties mean:

- Completeness means that the verifier will always accept the proof if the original statement is valid (the sum of over the boolean hypercube is in fact ), except perhaps with negligible probability.

- Soundness means that it’s very hard for a dishonest prover to generate a valid proof, except with negligible probability.

Soundness actually comes in various flavors, but we’ll defer that discussion for later.

Completeness is pretty straightforward: if the prover does indeed know , and has correctly computed , then they can pass every round by simply building each correctly according to the protocol. This is, since they know , they should have no problem whatsoever to provide responses to each challenge.

Soundness is a bit more tricky to show. Essentially, we need to gauge how hard it would be for a prover to cheat.

To do this, let’s actually follow through a cheating attempt. Suppose the prover doesn’t know , and instead they claim the sum of over the boolean hypercube is . Or even worse: they are interested in convincing us that the result is correct for their own benefit!

How could they fool a verifier?

Well, in the first round, they’d would need to send some polynomial such that:

Now, this is where it gets interesting: they cannot use to calculate , because the sum would be (most likely) different than . Thus, they’d need to craft so that the first check passes.

In other words, and the real are different polynomials. So when the verifier chooses their first challenge , it’s very unlikely that and are the same value.

We can actually know exactly how unlikely this is. In the spirit of keeping things progressive, I think it’s better to leave that discussion for later. What I will say though is that you have all the information you need for this endeavor in the previous article.

So if you want to think about it, be my guest! If not, don’t worry — we’ll circle back to this.

It would be super unfortunate if and happen to coincide. If that’s the case, the prover can continue with their lie, only that this time they could in theory respond to every subsequent round with valid polynomials: they just calculate normally.

We can apply this same reasoning to every round in the same recursive fashion: to pass round , the prover needs to build so that it passes the next check, and they would have to get lucky on the next challenge ().

If the probability of this happening is some value , then a cheating prover has chances of getting lucky (or rolls in total), so the total probability of a successful heist would be . As long as this combined value is small, then the algorithm is sound.

Lastly, the final oracle query allows the verifier to catch the prover in their final lie (unless they get lucky, of course).

So there you have it! The sum-check protocol fulfills the completeness and soundness requirements.

Summary

We’ve just seen our first verifiable computing algorithm. It might not seem like much for now, but it’s a start. And we’ve seen some valuable tools and concepts in the process such as recursion, verifier challenges, clever polynomial evaluations, oracle queries, and even how to check completeness and soundness!

I have intentionally skipped over the computational costs of this protocol, because I want us to focus on the math for now. If you’re curious though, you can easily calculate that yourself — and you’ll find three things:

- The prover runs in time.

- The verifier runs in time (linear).

- There are a total of communication steps, and the total number of field elements sent during the entire protocol is .

Clearly, the verifier is much faster than the prover as becomes larger. But some questions may arise around this: is linear time good enough? Does it matter if the prover takes too long?

And especially, what about the communication overhead? The verifier might run faster, but if it has to wait for the prover, then the whole process is slowed down!

Well... Yeah. In its current form, this is not a very practical protocol.

I warned you though!

Let’s just say it has potential.

We’ll start piecing together the importance of the sum-check protocol in the next few articles. And for that, we’ll want to jump onto other important ideas, especially around how to represent statements and computation in general.

That will be the topic for the next one!

Did you find this content useful?

Support Frank Mangone by sending a coffee. All proceeds go directly to the author.